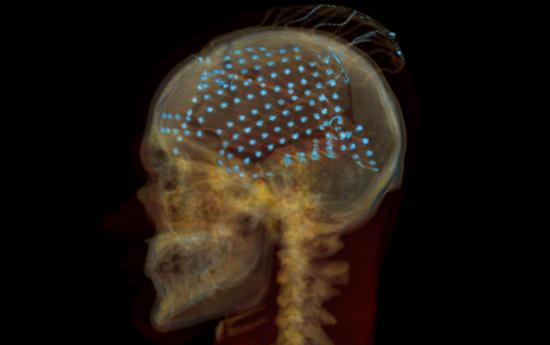

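As if reading people's memories were not enough, scientists can now hear them as well. In a new study, neuroscientists connected a network of electrodes to the auditory centers of 15 patients' brains (pictured) and recorded the patients' brain activity while they listened to words such as "jazz" and "Waldo".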

Image credit: Adeen Flinker/UC Berkeley

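They found that each word produced a distinct pattern of activity in the brain. They then developed two different computer programs that reconstruct the words a subject heard by analyzing that subject's brain activity. Reconstructions from the better-performing of the two programs were good enough for the scientists to correctly decode 80% to 90% of the words the subjects had heard.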

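There is evidence that the words we hear and the words we recall or imagine trigger similar processing in the brain. The study therefore suggests that scientists may one day be able to "listen in" on a person's thoughts, which is potentially good news for patients who cannot speak because of amyotrophic lateral sclerosis (ALS) or other conditions.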

Reconstructing Speech from Human Auditory Cortex

Brian N. Pasley, Stephen V. David, Nima Mesgarani, Adeen Flinker, Shihab A. Shamma, Nathan E. Crone, Robert T. Knight, Edward F. Chang

How the human auditory system extracts perceptually relevant acoustic features of speech is unknown. To address this question, we used intracranial recordings from nonprimary auditory cortex in the human superior temporal gyrus to determine what acoustic information in speech sounds can be reconstructed from population neural activity. We found that slow and intermediate temporal fluctuations, such as those corresponding to syllable rate, were accurately reconstructed using a linear model based on the auditory spectrogram. However, reconstruction of fast temporal fluctuations, such as syllable onsets and offsets, required a nonlinear sound representation based on temporal modulation energy. Reconstruction accuracy was highest within the range of spectro-temporal fluctuations that have been found to be critical for speech intelligibility. The decoded speech representations allowed readout and identification of individual words directly from brain activity during single trial sound presentations. These findings reveal neural encoding mechanisms of speech acoustic parameters in higher order human auditory cortex.
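The word-identification step mentioned in the abstract can be illustrated with a minimal sketch. It assumes a reconstructed spectrogram is already available and picks the candidate word whose spectrogram correlates best with it. The function names (`pearson`, `flatten`, `identify_word`) and the tiny 2x3 "spectrograms" are made up for illustration, and this is not necessarily the authors' exact procedure.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # correlation is undefined for a constant signal
    return cov / sqrt(var_a * var_b)


def flatten(spectrogram):
    """Turn a frequency-by-time grid into one flat list of values."""
    return [value for row in spectrogram for value in row]


def identify_word(reconstructed, candidates):
    """Return the best-matching word and the score of every candidate."""
    target = flatten(reconstructed)
    scores = {
        word: pearson(target, flatten(spec))
        for word, spec in candidates.items()
    }
    best = max(scores, key=scores.get)
    return best, scores


# Hypothetical toy data: rows are frequency bands, columns are time bins.
candidates = {
    "jazz": [[0.9, 0.1, 0.0], [0.2, 0.8, 0.1]],
    "waldo": [[0.1, 0.7, 0.9], [0.8, 0.2, 0.0]],
}
# A noisy stand-in for a spectrogram decoded from brain activity.
reconstructed = [[0.8, 0.2, 0.1], [0.3, 0.7, 0.0]]

best_word, scores = identify_word(reconstructed, candidates)
print(best_word)
```

With real recordings the candidate spectrograms would come from the actual word stimuli and the grids would be far larger, but the matching logic would stay the same under these assumptions.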

Article link: https://www.plosbiology.org/article/info:doi/10.1371/journal.pbio.1001251